Towards large-scale evaluation of behavioral states in wild understudied King vultures (Sarcoramphus papa)

Enzo Basso 1), Chris Beirne 1), Kyle Luthy 2), Tobias Petri 3)

1) Osa Conservation – Tropical Movement Lab

2) Wake Forest University

3) Schäuffelhut Berger GmbH – Firetail

Challenges in evaluating behavioral predictions for wild animals

In last year’s newsletter, we gave this community a short primer on the joint work of Osa Conservation (https://osaconservation.org/) and the Firetail team (www.firetail.de), explaining our approach to predicting behavioral states from acceleration data recorded for wild King vultures in Costa Rica.

Figure 1: King vulture with mounted camera

Here, we provide an update on this work. In particular, we describe how we gained a deeper understanding of the captured data and how we evaluate behavioral predictions for these animals.

Capturing Synchronized Data

Obtaining gold standards to assess behavioral predictions for flight-capable animals in inaccessible areas is a major challenge. Direct observation over long periods is typically not possible. In addition, tags usually record acceleration data in non-consecutive time intervals of a specified length (bursts).

Any visual confirmation of behavior therefore requires sufficient overlap with these bursts. Animal-mounted cameras with time-controlled recording seem like a natural choice in our setting, but the amount of data they produce typically rules out remote collection.

To tackle these challenges and to obtain high-quality synchronized video footage for a side-by-side comparison of gold-standard video data and acceleration-based predictions, we devised, in collaboration with Kyle Luthy and his team at Wake Forest, a drop-off camera system that integrates with our e-obs tags. The backpack element weighed 143 g, approx. 3.7% of the animals’ mass.

The system was set to release the payload after one week, during the nighttime roost and within a defined geofenced region. We successfully deployed the system on three adult animals using a modified walk-in box trap. One system malfunctioned and released too soon. The two remaining systems provided data as planned.
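The three release criteria named above (elapsed time, night, geofence) can be combined in a single check. The following is only an illustrative sketch, not the firmware logic of the actual drop-off unit: the function names, the night window (20:00 to 04:00), and the rectangular fence coordinates are all assumptions made for the example, and roost detection is not modeled.

```python
from datetime import datetime, time, timedelta

# Hypothetical rectangular geofence: (south, west, north, east) in degrees.
EXAMPLE_FENCE = (8.3, -83.8, 8.8, -83.2)


def in_geofence(lat, lon, fence):
    """True if the position lies inside the rectangular fence."""
    south, west, north, east = fence
    return south <= lat <= north and west <= lon <= east


def is_night(local_time, night_start=time(20, 0), night_end=time(4, 0)):
    """True inside a night window that wraps around midnight (assumed hours)."""
    return local_time >= night_start or local_time < night_end


def should_release(deployed_at, now, lat, lon, fence,
                   min_age=timedelta(days=7)):
    """All three criteria from the text must hold at the same time."""
    old_enough = now - deployed_at >= min_age
    return old_enough and is_night(now.time()) and in_geofence(lat, lon, fence)


deployed = datetime(2024, 1, 1, 12, 0)
# One week later, at night, inside the fence: release is allowed.
print(should_release(deployed, datetime(2024, 1, 8, 23, 0), 8.5, -83.5, EXAMPLE_FENCE))
```

Requiring all conditions together means the payload stays attached if the animal is outside the recovery area when the week is up, and is released on the first later night on which it is inside.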

Annotating the Recorded Data

The system was set to record a 10-second video and a 10-second acceleration burst at 20 Hz every 5 minutes. This ensured sufficient overlap between the data sources for downstream evaluation. Overall, we captured 460 video sequences spanning 1h 21m 13s. These sequences were then processed using BORIS (https://www.boris.unito.it), assigning six behavioral patterns (drinking, feeding, flapping, preening, resting, soaring/gliding) to the video footage.
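To make the recording schedule concrete, the sketch below works out the number of samples per burst and shows one possible way to attach a video annotation to an acceleration burst by temporal overlap. The schedule values come from the text; the `Interval` type, the `label_burst` helper, and the 50% coverage threshold are assumptions for illustration and do not describe the BORIS export format or Firetail's import.

```python
from dataclasses import dataclass

BURST_SECONDS = 10       # burst length (from the text)
SAMPLE_RATE_HZ = 20      # acceleration sampling rate (from the text)
CYCLE_SECONDS = 5 * 60   # one video and one burst every 5 minutes (from the text)

samples_per_burst = BURST_SECONDS * SAMPLE_RATE_HZ   # 200 samples per axis
cycles_per_day = 24 * 3600 // CYCLE_SECONDS          # 288 scheduled cycles per day


@dataclass
class Interval:
    start: float      # seconds on a clock shared by video and tag
    stop: float
    label: str = ""


def overlap_seconds(a, b):
    """Length of the time span shared by two intervals (0 if disjoint)."""
    return max(0.0, min(a.stop, b.stop) - max(a.start, b.start))


def label_burst(burst, annotations, min_fraction=0.5):
    """Return the behavior covering most of the burst, or None if the best
    candidate covers less than min_fraction of it (threshold is an assumption)."""
    covered = {}
    for ann in annotations:
        seconds = overlap_seconds(burst, ann)
        if seconds > 0:
            covered[ann.label] = covered.get(ann.label, 0.0) + seconds
    if not covered:
        return None
    label, seconds = max(covered.items(), key=lambda item: item[1])
    return label if seconds / (burst.stop - burst.start) >= min_fraction else None


# Made-up annotations for two consecutive cycles.
annotations = [
    Interval(0, 3, "preening"),
    Interval(3, 12, "soaring/gliding"),
    Interval(308, 320, "resting"),
]
print(label_burst(Interval(0, 10), annotations))     # soaring/gliding (7 of 10 s)
print(label_burst(Interval(300, 310), annotations))  # None (only 2 of 10 s covered)
```

A coverage threshold like this leaves bursts unlabeled when the video only partly confirms them, which keeps the gold standard conservative at the cost of fewer usable bursts.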

Acceleration vs Gold Standards

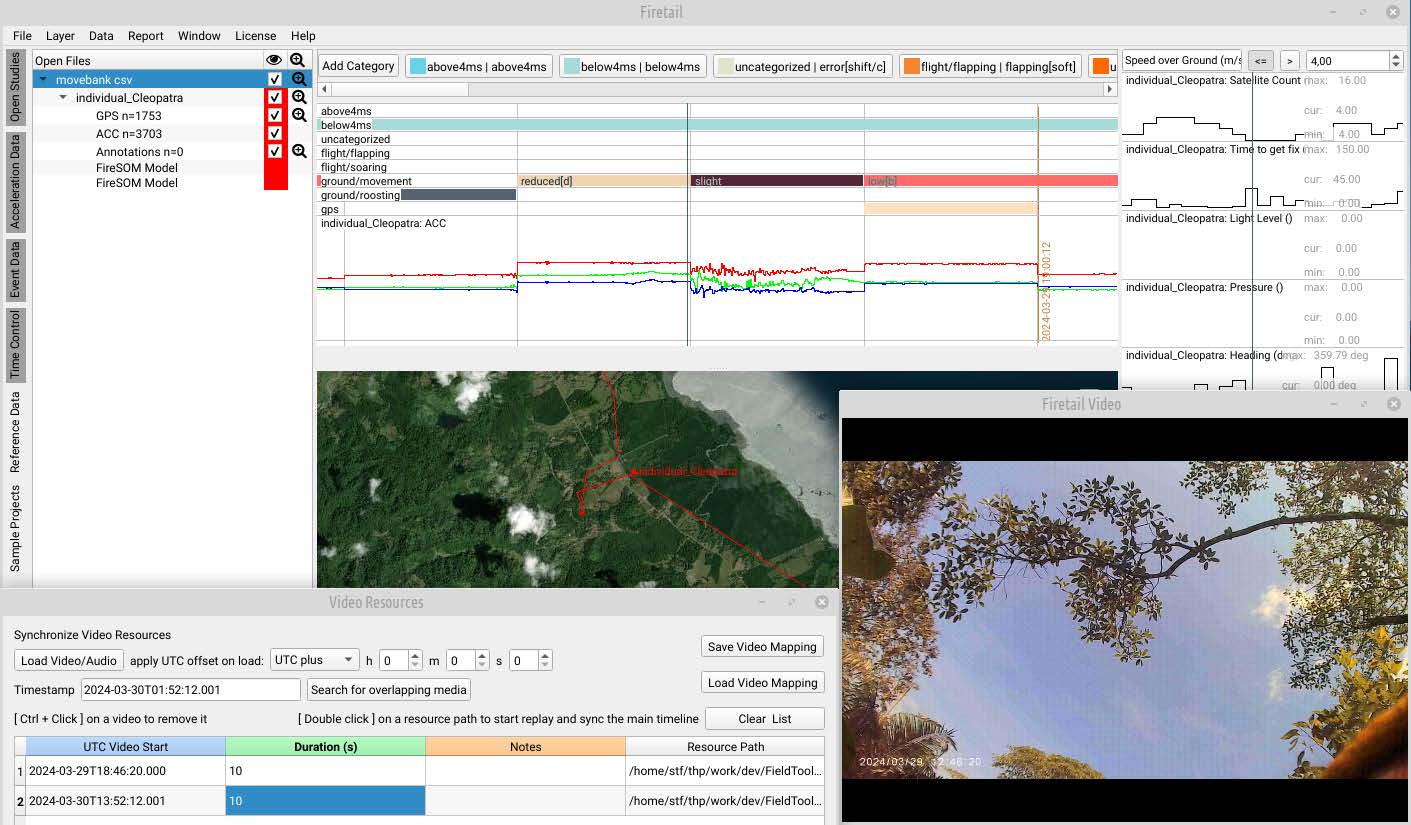

We imported the BORIS data into Firetail using the readily available interface, thereby overlaying the categories assigned from the video data on the visual representation of the acceleration patterns (X, Y, and Z axes).

This allowed us to assess typical acceleration patterns in the context of both annotations and video footage. We also developed a new module for Firetail that embeds video, audio, and image data alongside the other available data sources.

We can now immediately inspect predicted categories, gold-standard ethogram annotations, acceleration patterns, GPS data, and other sensor data alongside the visual confirmation provided by the video data. Combined with the working prototype camera mount and the established e-obs tags, this lets us evaluate and visualize the collected data much faster and in full context.
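Beyond visual inspection, a comparison of predicted categories against gold-standard annotations can be summarized numerically. The following is a minimal sketch of per-category precision and recall computed from paired labels. It is not Firetail's built-in validation procedure, and the example labels are invented for illustration, not results from this study.

```python
from collections import Counter


def confusion_counts(gold, predicted):
    """Count (gold, predicted) label pairs, one pair per annotated burst."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must have the same length")
    return Counter(zip(gold, predicted))


def per_class_scores(gold, predicted):
    """Return {label: (precision, recall)}; a score is None when undefined."""
    counts = confusion_counts(gold, predicted)
    scores = {}
    for label in sorted(set(gold) | set(predicted)):
        tp = counts[(label, label)]
        fp = sum(n for (g, p), n in counts.items() if p == label and g != label)
        fn = sum(n for (g, p), n in counts.items() if g == label and p != label)
        precision = tp / (tp + fp) if tp + fp else None
        recall = tp / (tp + fn) if tp + fn else None
        scores[label] = (precision, recall)
    return scores


# Invented example labels for six bursts.
gold = ["resting", "resting", "soaring/gliding", "flapping", "soaring/gliding", "preening"]
pred = ["resting", "preening", "soaring/gliding", "soaring/gliding", "soaring/gliding", "preening"]

for label, (precision, recall) in per_class_scores(gold, pred).items():
    print(label, precision, recall)
```

Reporting scores per category rather than a single accuracy figure matters here because rare behaviors such as drinking or feeding would otherwise be masked by frequent ones such as resting.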

Outlook

The prototype video capture setup with our novel drop-off mechanism met our objectives and appears well suited to improving our understanding of King vulture behavioral patterns on a large scale. Together with Firetail’s new media synchronization module and built-in validation procedures, we now have a powerful set of tools to scale our initial experiments to the complete set of about 70 monitored animals in Costa Rica and Perú in 2025.

The validated patterns will also provide a robust basis for improving the precision of Firetail’s predictive FireSOM system and may pave the way towards supervised prediction models as well. At the population level, the results will provide valuable input for the derivation of social networks and state-space models.

Acknowledgements

We thank our wonderful partnering teams at Wake Forest University for the drop-off design and at National Geographic for the camera design, as well as our incredible team of field workers, researchers, and data analysts.

This work was funded by the Gordon and Betty Moore Foundation and e-obs Wildlife Telemetry.

Contact:

Tobias Petri, thp@firetail.de

Kyle Luthy, luthyka@wfu.edu

Chris Beirne, chrisbeirne@osaconservation.org